Executive Summary

CloudTalk’s Product Marketing and GTM teams conducted three field experiments to understand how AI-driven voice technology improves efficiency across the sales and customer experience lifecycle. Each experiment explored a different stage of the funnel: inbound, long-tail qualification, and post-webinar engagement.

Across all tests, one pattern emerged: AI consistently increased the number of live conversations, reduced operational bottlenecks, and uncovered opportunities that would otherwise have been missed. These included leads that had never been contacted, inbound calls that would have gone unanswered, and prospects who never responded to email.

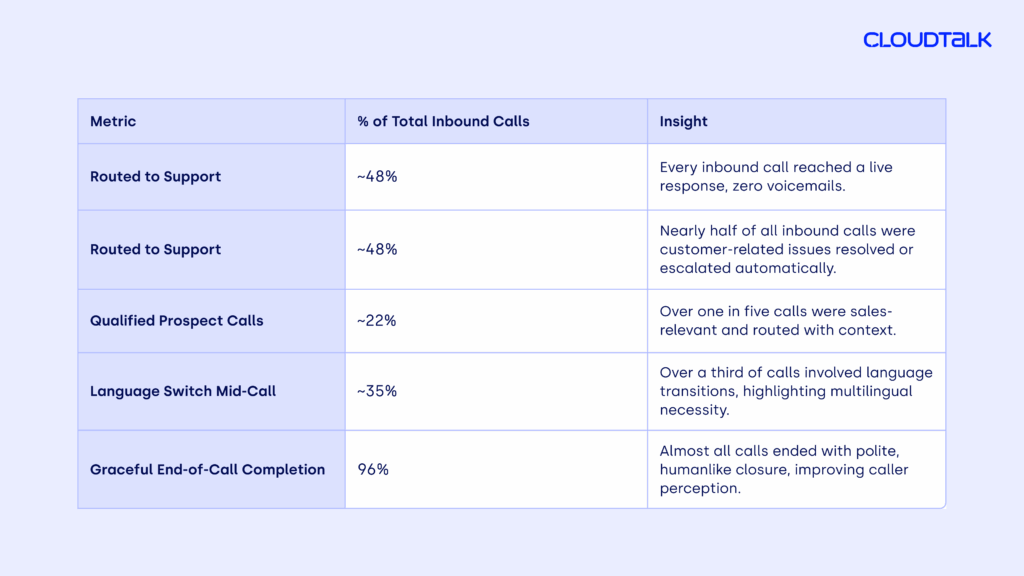

In the inbound experiment, a 24/7 AI receptionist answered every call coming through website and campaign numbers. The system handled 100% of inbound calls, routed 48% of them to support, identified 22% as qualified prospects, and ended 96% of conversations gracefully, eliminating the voicemail dead end and giving human teams more context.

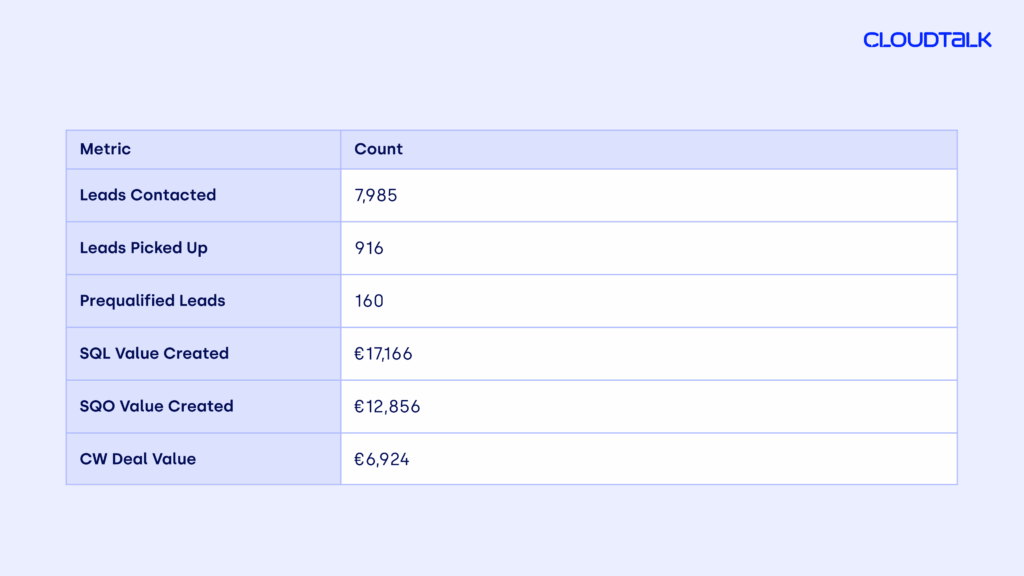

The long-tail qualification experiment tested whether AI could work an untouched segment of 7,985 leads. The agent reached 916 of them and prequalified 160, producing close to 80 SQLs. The results show that AI makes it economically viable to work segments that would otherwise be too small or low-intent for human teams to prioritize.

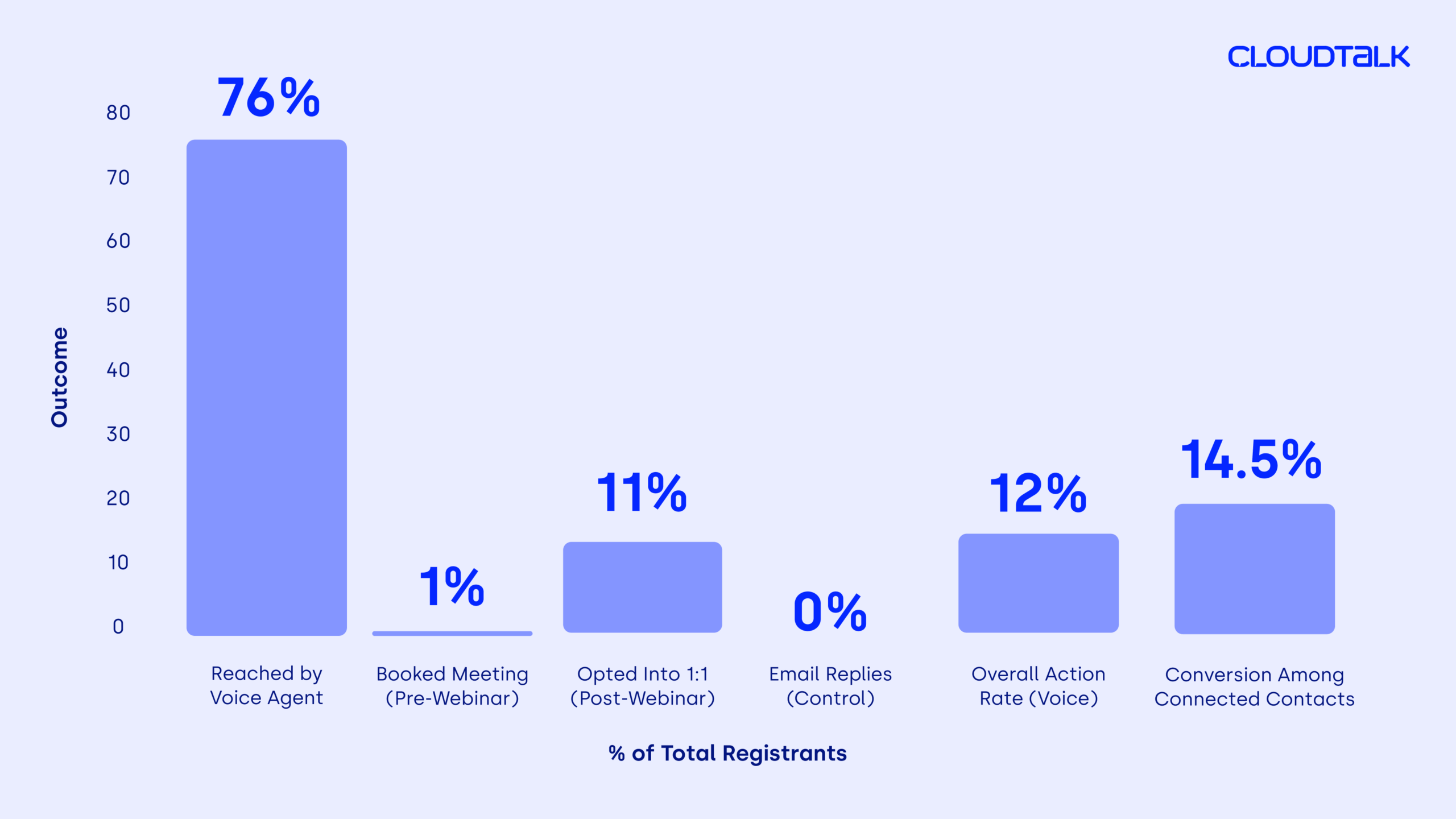

In the post-webinar follow-up experiment, an AI Voice Agent replaced traditional email outreach. While email generated 0% replies, the voice agent achieved a 12% overall action rate: 1% of registrants booked meetings before the webinar even took place, and 11% opted into a 1:1 conversation afterward. Live voice conversations clearly outperformed passive channels.

These experiments demonstrate that AI does not replace the human touch; it expands its reach, increases capacity, and ensures that more conversations happen across the customer journey.

Introduction

Why We Ran These Experiments

Sales and support teams regularly struggle with limited time, high volumes of inbound and outbound calls, missed calls, ignored emails, and slow follow-up.

AI tools promise to solve many of these challenges, but validating that promise requires results that hold up in real-world situations. Unfortunately, most of the available data comes from controlled environments or simulated conversations, not from real interactions with customers.

To understand the practical impact of conversational AI, CloudTalk’s Product Marketing team designed three field experiments across different stages of the revenue funnel. Each experiment tested a simple question: How does AI perform when it’s placed directly into real workflows, with real leads, prospects, and customers?

Instead of focusing on automation for its own sake, the goal was to observe how AI changes connection rates, improves coverage, affects user experience, and reshapes the economics of engagement. These experiments were intentionally lightweight, fast to deploy, and grounded in real operational scenarios, providing insight not into what AI could do, but into what it actually delivers in practice.

The following report consolidates these findings, highlights the patterns that emerged, and outlines what they mean for sales, marketing, and CX teams moving forward.

About CloudTalk

CloudTalk is an AI-powered business phone platform designed for sales and support teams.

Founded in 2016, the company has evolved from a simple click-to-call tool into a global communication system used by more than 4,000 businesses across 100+ countries.

CloudTalk’s platform includes AI Voice Agents, AI-powered dialing, conversation intelligence, workflow automation, and advanced call routing tools tailored for customer-facing teams.

With more than 140 employees across 20+ nationalities, CloudTalk focuses on improving customer conversations through scalable, reliable voice technology.

The company’s mission is to build the world’s most capable AI calling software, helping teams connect with customers more efficiently and deliver exceptional customer experiences.

EXPERIMENT 1: 24/7 Inbound Voice Agent

The Always-On Inbound Line: How AI Voice Agents Transform Missed Calls into Engagement

Key Takeaways

Replacing voicemail with a 24/7 AI receptionist ensured every inbound call was answered, tracked, and followed up.

With 100% of calls answered, multilingual handling, and 96% of conversations ending gracefully (concluding with polite, helpful closings rather than abrupt hang-ups), the experiment showed that AI can reliably cover the gaps humans can’t, such as after-hours calls, busy periods, and missed notifications.

With an AI Voice Agent, every call becomes an opportunity to support, qualify, or route, proving that always-on voice coverage is now operationally achievable without additional headcount.

Executive Summary

In most organizations, inbound phone numbers are underutilized assets. Calls are missed after hours, during high-volume periods, or when routing fails, leading to lost opportunities and customer frustration.

This field experiment tested whether an AI Voice Agent, trained with curated company knowledge and multilingual logic, could autonomously handle inbound calls at any hour.

The outcome was clear: AI Voice Agents can effectively answer 100% of inbound calls, route nearly half to support or escalation, and engage over one in five as qualified prospects, converting a static phone number into an always-on engagement channel.

Methodology

Objective:

To determine whether a 24/7 AI Voice Agent could replace voicemail, reduce missed calls, and improve both lead capture and customer experience.

Scope:

- Inbound calls from website and ad campaigns
- Multilingual agent deployment (language detection and switching)
- Knowledge base derived from company web content
- Control group: previous voicemail/unanswered setup

Setup Process:

Knowledge Curation

- Instead of dense internal documentation, content was extracted from live website pages using Screaming Frog.
- ChatGPT was then used to restructure it into conversational summaries and tiered responses.
- The resulting dataset functioned as a “knowledge layer” capable of answering pricing, feature, or support-related questions.

Agent Design

- Greeting: Open-ended question (“Are you looking for product info, support help, or something else today?”)
- Intent handling: Sales, support, spam, and unclear intents branched dynamically
- Multilingual response: Seamless mid-call switching between languages
- Graceful termination logic: Humanlike closings or fallback routing based on confidence levels
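The intent branching and confidence-based fallback described above can be sketched as a small routing function. This is a minimal illustration, not CloudTalk’s implementation. The 0.7 threshold, the action names, and the `IntentResult` structure are all hypothetical, and the sketch assumes an upstream classifier has already produced an intent label and a confidence score.

```python
from dataclasses import dataclass

# Hypothetical cut-off: below this confidence the agent does not act on the intent.
CONFIDENCE_THRESHOLD = 0.7

# Hypothetical action names for each branch of the greeting's intent split.
INTENT_ACTIONS = {
    "sales": "qualify_prospect",
    "support": "route_to_support",
    "spam": "polite_close",
}


@dataclass
class IntentResult:
    intent: str        # "sales", "support", "spam", or "unclear"
    confidence: float  # 0.0-1.0, from whatever classifier the platform uses


def next_action(result: IntentResult) -> str:
    """Choose the agent's next step from a detected intent."""
    if result.confidence < CONFIDENCE_THRESHOLD:
        # Not sure enough to act: ask again or fall back to a human
        return "clarify_or_fallback"
    # "unclear" (or any unknown label) also takes the fallback path
    return INTENT_ACTIONS.get(result.intent, "clarify_or_fallback")


print(next_action(IntentResult("support", 0.92)))  # route_to_support
print(next_action(IntentResult("sales", 0.41)))    # clarify_or_fallback
```

Because the threshold check runs before the intent lookup, a low-confidence guess never triggers a sales or support path. It always falls back to clarification or a human.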

Control

- Prior to deployment, 60–70% of inbound calls during off-hours were missed or went unanswered.
- The experiment replaced this system entirely with 24/7 coverage.

Results

Analysis & Interpretation

01 From Missed Calls to Managed Conversations

The biggest insight is operational: “missed calls” are no longer an inevitability.

By replacing voicemail with an autonomous, responsive agent, organizations can now maintain full-time engagement coverage, converting downtime into interaction time.

This reframes the business phone number not as a static touchpoint, but as a living inbound system capable of contextually responding to customer needs 24/7.

02 Content Readiness Is a Hidden Asset

The experiment showed that most companies already possess 90% of what an AI agent needs to perform well; it just isn’t structured conversationally. By extracting website and FAQ content and reformulating it into dialogic responses, teams can deploy voice AI without overhauling documentation or creating new assets. In short, the value isn’t in writing new scripts, but in translating static content into interactive language.

03 Multilingual Handling Is No Longer Optional

More than one-third of inbound calls switched languages mid-conversation. This highlights a crucial shift: multilingual responsiveness isn’t a premium feature; it’s a baseline expectation for any business with an international web presence. Companies that fail to support multiple languages risk silent attrition from interested but linguistically mismatched callers.

04 Graceful Exit Logic Redefines Caller Experience

One overlooked success factor was end-of-call design.

By scripting how the AI ends conversations (thanking users, escalating intelligently, or gracefully disengaging), the agent avoided the “robotic hang-up” moment that often undermines trust. This created continuity between automation and human support, preserving brand tone even in fully automated exchanges.

05 The Economics of Always-On Engagement

From an operational perspective, 24/7 voice coverage without human staffing changes the economics of inbound support. Each call answered by AI recovers an opportunity that might otherwise have been missed and lowers inbound handling overhead. The experiment demonstrates that AI Voice Agents extend capacity, not headcount, allowing teams to scale service coverage without proportional labor expansion.

“Missed calls are no longer inevitable. AI Voice Agents answered 100% of inbound calls and resolved or routed nearly half—without adding headcount.”

Strategic Implications

Limitations

The experiment’s performance metrics represent early deployment data. Real-world variation (seasonality, marketing volume, product maturity) may affect scalability. Additionally, while STT (speech-to-text) and intent detection performed reliably, complex emotional or multi-intent interactions still required human takeover, an area for future improvement.

Conclusion

This experiment shows that AI Voice Agents can transform inbound call handling from reactive to proactive.

By answering 100% of calls, maintaining multilingual fluency, and closing 96% of conversations gracefully, the system proved that automation and empathy can coexist in voice interactions.

EXPERIMENT 2: Voice Agent for Long-Tail Leads

Voice Agents and the Long Tail: How AI Qualification Unlocked Hidden Pipeline

Key Takeaways

The long tail isn’t “low value”; it’s low-touch, and that’s a solvable problem.

By surfacing 70 SQLs from a pool of leads that would not otherwise have been contacted, the Voice Agent proved that AI can economically unlock segments humans don’t have time to pursue.

The lesson: when qualification cost approaches zero, every lead becomes worth engaging, and dormant pipeline becomes a measurable revenue channel.

Executive Summary

A recent field experiment tested whether a conversational AI Voice Agent could effectively qualify low-priority or “long tail” leads, the kind that rarely receive human follow-up due to low intent, small deal size, or geographic mismatch.

The findings suggest a fundamental shift in how sales organizations can scale efficiently:

Methodology

Objective:

To evaluate whether a trained Voice Agent could autonomously call, qualify, and surface viable sales opportunities from previously untouched leads.

Data Set:

- 7,985 total leads
- Prioritized low-intent sources, small trial accounts (1–2 users), and out-of-region contacts
- None had prior sales engagement

Agent Design:

The Voice Agent was prompted to:

- Ask about team size, call needs, and purchase urgency
- Detect buying signals and disqualify non-relevant leads
- Route qualified leads automatically to CRM for rep follow-up

The experiment focused on conversational efficiency, qualification accuracy, and opportunity yield, not speed or automation volume alone.

Results

The voice agent achieved an 11.47% connect rate across untouched leads and prequalified 17.47% of those who answered. The result was more than 70 sales-qualified leads from a segment that would probably never have been reached.
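The two rates use different bases. The connect rate is measured against all leads, while the prequalification rate counts only leads who answered. A quick check with the counts reported for this experiment (7,985 leads, 916 connected, 160 prequalified) reproduces both figures:

```python
total_leads = 7_985   # untouched long-tail leads in the segment
connected = 916       # leads who picked up
prequalified = 160    # connected leads the agent prequalified

connect_rate = connected / total_leads    # base: all leads
prequal_rate = prequalified / connected   # base: connected leads only
end_to_end = prequalified / total_leads   # base: all leads

print(f"Connect rate: {connect_rate:.2%}")                         # Connect rate: 11.47%
print(f"Prequalified among connected: {prequal_rate:.2%}")         # Prequalified among connected: 17.47%
print(f"Prequalified share of all leads: {end_to_end:.2%}")        # Prequalified share of all leads: 2.00%
```

The end-to-end yield equals the product of the two rates, so roughly 2% of the original list was prequalified. SQLs are a further subset of those 160 contacts and are not computed here.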

Analysis & Interpretation

01 AI Revealed a Hidden Layer of Pipeline

Traditional sales models optimize for average deal size and conversion probability, which often excludes small accounts or low-intent leads. However, this study suggests that “long tail” segments contain real revenue potential, provided they can be reached and qualified at near-zero marginal cost.

In this experiment, the voice agent called leads the team had never contacted before. About 11.47% picked up the call, and of those, 17.47% were qualified as MQLs. This resulted in more than 70 sales-qualified leads, without requiring any extra work from the sales team.

02 The Voice Channel Outperforms Passive Outreach

Many of the same leads had ignored email and web nurture campaigns, yet engaged with a phone call. This aligns with a growing industry observation: voice remains the most attention-capturing channel, even in low-intent segments. The Voice Agent’s humanlike tone and short call duration (typically under two minutes) provided just enough friction to engage curiosity without creating resistance.

03 Human Oversight Becomes a Scalability Lever

Rather than replacing reps, the Voice Agent acted as a filtering mechanism, creating a cleaner, smaller, and more actionable pipeline. The 160 prequalified contacts were tagged, segmented, and enriched automatically before reaching a human. This not only reduced pipeline clutter but also elevated the quality of human selling time, shifting reps from “prospecting” to “closing.”

04 Data Quality and Conversational Design Drive Accuracy

Voice AI performance hinges on prompt logic and data integrity. The experiment succeeded because it combined structured logic trees (for routing and disqualification) with flexible natural language handling. In practical terms, the AI wasn’t just reading a script; it was following a guided reasoning path designed to extract the minimum viable insight from each call.

05 The Economics of “Micro Deals” Are Changing

Historically, small accounts (1–2 seats) couldn’t justify rep attention. But with Voice Agents handling qualification autonomously, the cost of discovery drops dramatically. That changes the economics of customer acquisition:

Every ignored segment becomes a testable market once qualification cost approaches zero.

Implications for Sales Organizations

“When qualification cost drops to zero, every lead becomes worth engaging. AI made dormant pipeline visible—and valuable.”

Limitations

While the sample size was large, the study represents a single dataset from one business context. Variables like brand familiarity, region, and product complexity may influence replicability. Additionally, the long-term retention or expansion potential of “micro deals” remains untested.

Conclusion

This experiment illustrates that AI Voice Agents can transform dormant lead segments into measurable revenue channels, not by replacing human sales, but by expanding where sales can economically operate.

By automating initial qualification across thousands of low-intent leads, the Voice Agent surfaced 70 sales qualified leads and multiple closed deals from accounts that would otherwise have been ignored.

EXPERIMENT 3: Webinar Follow-Up (Voice vs. Email)

Voice Over Email: How AI Calls Outperformed Traditional Webinar Follow-Ups

Key Takeaways

Voice decisively outperformed email, demonstrating that real-time, conversational follow-up converts interest into action far more effectively than passive channels. With 12% of registrants taking action (versus 0% via email) and 14.5% conversion among connected contacts, the AI agent turned a traditionally low-engagement workflow into a live dialogue. The insight is clear: when timing and tone align, AI-powered voice becomes a high-impact extension of event marketing, bridging the gap between registration and real engagement.

Executive Summary

A controlled experiment tested whether AI-powered voice follow-up could outperform traditional email in engaging webinar registrants. Using an AI Voice Agent instead of a standard email workflow, the experiment reached 76% of registrants, booked meetings with 1% of registrants before the webinar, and secured post-webinar opt-ins for one-on-one conversations from 11%. In total, the voice-driven approach achieved a 12% overall action rate compared to 0% via email, suggesting that voice engagement is emerging as a high-impact, underused post-event channel.
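Because the email baseline produced no actions, a multiplicative comparison (“N times better”) is undefined. The meaningful comparison is the difference in percentage points. Below is a minimal sketch using the reported rates. It assumes the 1% pre-webinar bookings and the 11% post-webinar opt-ins are separate groups of registrants, which the reported 12% total implies.

```python
# Shares of all webinar registrants, as reported in the experiment
pre_webinar_bookings = 0.01  # meetings booked on the reminder call
post_webinar_optins = 0.11   # opt-ins for a 1:1 conversation
email_action_rate = 0.00     # replies to the baseline follow-up email

# Assumes no registrant is counted in both groups
voice_action_rate = pre_webinar_bookings + post_webinar_optins
lift_points = (voice_action_rate - email_action_rate) * 100

print(f"Voice action rate: {voice_action_rate:.0%}")              # Voice action rate: 12%
print(f"Lift over email: {lift_points:.0f} percentage points")    # Lift over email: 12 percentage points

# A multiplicative "lift" is undefined when the baseline is zero
ratio = voice_action_rate / email_action_rate if email_action_rate else None
print(ratio)  # None
```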

Methodology

Objective:

To test whether conversational AI can replace passive follow-up methods (emails) with active, real-time engagement that converts webinar interest into action.

Design Overview:

- Target group: Webinar registrants (76% with valid phone numbers)
- Tools: AI Voice Agent (for calls), Google Sheets (tracking), Slack (alerts)
- Baseline: Standard post-webinar follow-up email with 1:1 offer
- Timeline: Two-phase experiment executed within 48 hours of a scheduled webinar

Phases:

01 Pre-Webinar Reminder Call, designed to confirm attendance and reduce no-shows
02 Post-Webinar Follow-Up Call, designed to drive personalized, context-aware engagement
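The two-phase timeline can be expressed as a pair of 24-hour windows around the event. The sketch below is a hypothetical helper, not part of the tooling the experiment used (an AI Voice Agent with Google Sheets and Slack). The webinar date and time are invented for illustration.

```python
from datetime import datetime, timedelta
from typing import Optional

# Both calls fall within 24 hours of the event, per the experiment's timeline
WINDOW = timedelta(hours=24)


def phase_for(call_time: datetime, webinar_start: datetime,
              webinar_end: datetime) -> Optional[str]:
    """Return the call phase a timestamp falls into, or None if outside both windows."""
    if webinar_start - WINDOW <= call_time < webinar_start:
        return "pre_webinar_reminder"
    if webinar_end < call_time <= webinar_end + WINDOW:
        return "post_webinar_follow_up"
    return None  # during the webinar, or too early or too late to call


# Hypothetical webinar: 16:00-17:00 on 15 January 2025
start = datetime(2025, 1, 15, 16, 0)
end = datetime(2025, 1, 15, 17, 0)
print(phase_for(datetime(2025, 1, 15, 9, 30), start, end))  # pre_webinar_reminder
print(phase_for(datetime(2025, 1, 16, 10, 0), start, end))  # post_webinar_follow_up
print(phase_for(datetime(2025, 1, 17, 10, 0), start, end))  # None
```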

Results

The AI Voice Agent generated measurable engagement where email produced none, despite identical messaging and timing.

Analysis & Interpretation

01 Voice Cuts Through Post-Event Apathy

Webinar follow-up is a well-known conversion bottleneck. Most registrants don’t reply to emails, not because of disinterest, but because email is cognitively easy to ignore.

Voice, by contrast, demands presence. The conversational medium, even when AI-driven, elicited engagement that asynchronous channels couldn’t match. The 14.5% conversion rate among connected contacts shows that tone, timing, and immediacy matter more than the medium’s “human” authenticity.

02 The Psychological Shift: From “Outreach” to “Assistance”

Framing the post-webinar call as helpful rather than sales-driven fundamentally changed participant behavior. When the agent asked questions like “Would you like the recording?” or “Did you have any follow-up questions about the topic?”, it triggered reciprocity, the natural human tendency to respond to perceived helpfulness.

In other words, the AI wasn’t “selling”; it was supporting curiosity. That simple shift transformed the interaction dynamic.

03 Timing Is the Conversion Multiplier

The calls occurred within 24 hours before and after the event, the precise window when interest peaks. Unlike email, which relies on delayed reaction, voice interactions create synchronous engagement while the topic is still top-of-mind. This suggests that voice AI could be particularly effective for moment-based marketing, replacing lagging nurture sequences with real-time touchpoints.

04 Simplicity Beats Sophistication

The test deliberately avoided complex integrations or CRM automation. A lightweight setup (Google Sheets + Slack) delivered measurable ROI, proving that experimentation doesn’t require full-scale implementation.

This points to a key operational insight: teams can validate new channels fast before committing to infrastructure changes.

05 AI Sustains Energy Where Humans Plateau

Repetition fatigue is a major limiter in manual follow-up. Humans naturally lose enthusiasm by the 10th identical call; AI doesn’t. Each conversation retained the same upbeat tone, which likely contributed to higher connection quality and caller satisfaction. This consistency gives AI a qualitative advantage in maintaining brand tone at scale.

Strategic Implications

Limitations

- The sample size was limited to a single event and one audience segment (webinar registrants).
- Metrics like satisfaction or sentiment weren’t measured quantitatively.
- Longer-term conversion from 1:1 follow-ups to deals was not tracked.

Future studies could explore scaling across multiple webinars, varying tone and timing, or combining voice with SMS handoffs to extend engagement lifespan.

Conclusion

This experiment demonstrated that AI-powered voice touchpoints can outperform traditional email outreach in driving immediate, human-like engagement, achieving a 12% action rate where email achieved 0%.

By leveraging contextual, conversational timing, the Voice Agent converted passive webinar interest into active dialogue, proving that voice remains the most underutilized channel in digital marketing.

STRATEGIC RECOMMENDATIONS: Designing Human-AI Workflows That Scale

Deploy Voice AI to Qualify Large Lead Pools Automatically

- Deploy parallel dialing for warm outbound lists, webinar registrants, and high-volume intent data sources.
- Prioritize it when the goal is maximum reach per hour, especially when team bandwidth is limited.
- Reserve power dialing for high-value accounts where reps need time to prepare context before each call.

Replace Voicemail With an Always-On AI Receptionist

- Implement a 24/7 inbound voice agent to answer every call, even outside business hours.
- Use it to gather context (“What problem are you calling about?”) so human support starts each interaction with more information.
- Add multilingual support to reduce friction in global inbound traffic.

Shift Post-Event Follow-Up From Passive to Proactive

- Replace (or augment) webinar follow-up emails with AI-powered voice touchpoints within 24 hours of the event.
- Use conversational logic to tailor follow-up paths for attendees vs. no-shows.
- Pair voice AI with lightweight tracking tools (Sheets, Slack) until volume justifies deeper automation.

Use Voice AI to Re-engage Leads Who Ignore Email

- Trigger voice outreach after no activity on nurture sequences, abandoned signups, or unbooked demos.
- Keep calls short (<90 seconds) and focused on one next step (book, confirm, ask a question).
- Use email/SMS to deliver links the agent mentions during the call.
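Each trigger above can be written as a simple rule check per lead. In this sketch the `Lead` fields, the trigger names, and the seven-day inactivity threshold are all assumptions; the report does not specify how its triggers were defined.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional

# Hypothetical threshold: the report does not say how long "no activity" lasts
INACTIVITY_DAYS = 7


@dataclass
class Lead:
    in_nurture: bool
    last_email_engagement: Optional[date]  # last open or click, None if never
    signup_started: bool
    signup_completed: bool
    demo_requested: bool
    demo_booked: bool


def voice_triggers(lead: Lead, today: date) -> List[str]:
    """List which re-engagement triggers apply; any non-empty result queues a call."""
    fired = []
    if lead.in_nurture and (
        lead.last_email_engagement is None
        or (today - lead.last_email_engagement).days >= INACTIVITY_DAYS
    ):
        fired.append("nurture_inactive")
    if lead.signup_started and not lead.signup_completed:
        fired.append("abandoned_signup")
    if lead.demo_requested and not lead.demo_booked:
        fired.append("unbooked_demo")
    return fired


lead = Lead(in_nurture=True, last_email_engagement=date(2025, 3, 1),
            signup_started=False, signup_completed=False,
            demo_requested=True, demo_booked=False)
print(voice_triggers(lead, today=date(2025, 3, 10)))  # ['nurture_inactive', 'unbooked_demo']
```

Returning the list of fired triggers, rather than a single yes/no, lets the agent open the call with the relevant next step (book, confirm, or answer a question).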

Improve Pipeline Hygiene With Automated Prequalification

- Let voice agents pre-screen leads before they ever reach sales, reducing noise and keeping signals inside the CRM clearer.
- Apply AI qualification logic to inbound form fills, not just outbound lists.
- Categorize leads as Ready, Not Now, or Not Fit, and route them accordingly.
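Here is a minimal sketch of the Ready / Not Now / Not Fit split. It assumes qualification answers similar to those the long-tail agent collected (call needs, team size, purchase urgency). The 30-day urgency cut-off, the field names, and the routing destinations are hypothetical. Team size only disqualifies an empty team, so 1–2 seat accounts remain eligible, in line with the long-tail findings.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional, Tuple


class Category(Enum):
    READY = "Ready"
    NOT_NOW = "Not Now"
    NOT_FIT = "Not Fit"


# Hypothetical routing destinations for each category
ROUTES = {
    Category.READY: "sales_queue",
    Category.NOT_NOW: "nurture_sequence",
    Category.NOT_FIT: "disqualified",
}


@dataclass
class Answers:
    needs_business_calling: bool         # does the team make or take calls at all?
    team_size: int                       # seats; 1-2 seat teams stay eligible
    purchase_within_days: Optional[int]  # None if no timeline was given


def categorize(a: Answers, urgency_days: int = 30) -> Tuple[Category, str]:
    """Map prequalification answers to a category and a routing destination."""
    if not a.needs_business_calling or a.team_size < 1:
        category = Category.NOT_FIT
    elif a.purchase_within_days is not None and a.purchase_within_days <= urgency_days:
        category = Category.READY
    else:
        category = Category.NOT_NOW
    return category, ROUTES[category]


category, route = categorize(Answers(True, 2, 14))
print(category.value, route)  # Ready sales_queue
```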

Build Small Experiments Before Full Automation

- Start with low-complexity, low-tech setups (Sheets + Slack + manual review).
- Test hypotheses over 48–72 hours, then scale the successful patterns.
- Measure experiments by:
→ Connect rate
→ Action rate (booked, qualified, escalated)
→ Conversation sentiment
→ Human time saved
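Connect rate and action rate can be computed directly from a call log using the definitions in Appendix C, where a conversation is a verified two-way interaction lasting more than 10 seconds. This sketch assumes a hypothetical `CallRecord` structure and measures action rate against conversations. The webinar experiment reported its action rate against all registrants instead, so the denominator should be stated alongside the figure. Sentiment and human time saved need transcript or time-tracking data and are left out.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Outcomes counted toward the action rate
ACTION_OUTCOMES = {"booked", "qualified", "escalated"}


@dataclass
class CallRecord:
    duration_seconds: float
    two_way: bool           # verified that both sides spoke
    outcome: Optional[str]  # "booked", "qualified", "escalated", or None


def is_conversation(call: CallRecord) -> bool:
    # Appendix definition: verified two-way interaction lasting more than 10 seconds
    return call.two_way and call.duration_seconds > 10


def experiment_metrics(calls: List[CallRecord]) -> Dict[str, float]:
    conversations = [c for c in calls if is_conversation(c)]
    actions = [c for c in conversations if c.outcome in ACTION_OUTCOMES]
    return {
        # Share of dial attempts that became a conversation
        "connect_rate": len(conversations) / len(calls) if calls else 0.0,
        # Assumption: action rate measured against conversations
        "action_rate": len(actions) / len(conversations) if conversations else 0.0,
    }


calls = [
    CallRecord(45, True, "booked"),
    CallRecord(8, True, None),    # too short to count as a conversation
    CallRecord(0, False, None),   # no answer
    CallRecord(120, True, None),
]
print(experiment_metrics(calls))  # {'connect_rate': 0.5, 'action_rate': 0.5}
```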

Treat Multilingual and Context-Aware Logic as Core Requirements

- Plan for AI conversation flows to handle language switching, casual phrasing, and off-topic replies.
- Script graceful exits and fallback logic to ensure a human-feeling end-of-call experience.
- Continuously refine prompts using real conversation transcripts.

Blend Humans and AI in a Hybrid Motion

- Let AI handle first-touch, repetitive, and time-sensitive interactions.
- Let humans take over complex, high-emotion, high-value conversations.
- Use AI to prepare reps with context summaries before the handoff.

Reinvest Saved Time Into Higher-Value Activities

- Shift freed-up SDR hours toward:
→ Target account research
→ Personalized outreach for strategic prospects
→ Handling qualified inbound
→ Creating content from real customer feedback
- Rebalance team KPIs to reflect conversation quality, not just activity count.

Future Outlook

As voice AI becomes more deeply embedded in sales and CX workflows, the next frontier will involve greater contextual intelligence and emotional awareness. Advancements in real-time sentiment detection, tone matching, and intent modeling will enable AI to adjust its behavior dynamically, shifting from purely informational responses to interactions that feel increasingly supportive, adaptive, and human-attuned.

Combined with multilingual fluency and seamless mid-conversation switching, AI voice systems will broaden accessibility and meet the expectations of global audiences who engage across languages, time zones, and communication styles.

At the same time, sales organizations will move toward hybrid human-AI workflows rather than fully automated experiences. AI will handle volume, timing, and triage; humans will take over when nuance, negotiation, or trust-building is required.

We expect future teams to operate with AI as an embedded collaborator, initiating calls, prequalifying leads, preparing context summaries, and even running first-touch discovery. The momentum seen in these early experiments suggests that the biggest efficiency gains will come from orchestrating the handoff between AI and people, not choosing one over the other.

“The insight isn’t that AI replaces the human touch; it’s that it extends it. AI made it possible to reach more people, hold more conversations, and unlock more opportunities than would have ever been possible manually.”

Appendix: Methodology & Data Notes

A. Tools & Systems Used

- AI Voice Agent Platform: Used for outbound qualification, inbound routing, and webinar follow-up.
- Dialer System: Power Dialer (1 line) and Parallel Dialer (3 lines).
- Data Sources:
→ Internal lead lists
→ CRM-exported long-tail segments
→ Website inbound call logs
→ Webinar registration database
- Lightweight Ops Tools:
→ Google Sheets (tracking + tagging)
→ Slack (alerts + handoff notifications)
→ Screaming Frog (website content extraction for knowledge base creation)
→ Manual quality review of AI transcripts

B. Sample Sizes & Experiment Windows

01 Long-Tail Voice Agent Qualification
Total leads: 4,073
Segments: 1–2 seat trials, low-intent sources, out-of-region
Duration: Two one-hour calling blocks
No prior human outreach

02 Inbound AI Receptionist
Sample: All inbound calls to website and campaign numbers
Languages supported: Multi-language (with automatic switching)
Duration: Ongoing deployment window

03 Webinar Follow-Up (Voice vs. Email)
Registrants: All webinar signups with valid phone numbers (76%)
Duration: 48-hour window (24h pre-webinar + 24h post-webinar)
Baseline: Standard follow-up email

C. Definitions

- Connect Rate: % of dial attempts resulting in a live conversation.
- Conversation: Any verified two-way interaction lasting >10 seconds.
- Demo Booked: Directly scheduled meeting with a sales representative.
- Prequalified Lead: Lead providing enough information to categorize readiness or fit.
- MQL (Marketing Qualified Lead): Prequalified contact showing interest and meeting basic criteria.
- SQL (Sales Qualified Lead): Lead showing intent or urgency and meeting sales-ready criteria.

D. Limitations

- Experiments were intentionally small-scale, designed for directional insight rather than statistical certainty.
- Results may vary across industries, markets, and rep experience levels.
- Long-term conversion outcomes (pipeline-to-revenue) were not included in these short-cycle tests.
- Inbound AI sentiment accuracy and multilingual recognition may differ depending on accents, noise levels, or phrasing.
- Email-based benchmarks reflect single-touch follow-up, not full campaign nurture.

E. Next Steps for Validation

- Expand each experiment to larger datasets to verify repeatability.
- Introduce CRM-integrated automation for deeper attribution tracking.
- Test additional languages, accents, and conversational complexity in inbound and follow-up flows.
- Compare different AI prompt styles to measure impact on tone, clarity, and conversion.
- Run A/B tests across industries or lead segments to identify where AI has the strongest leverage.